Migrating internationalization files

During my Ph.D. migration project, I considered the migration of several GUI aspects:

- visual

- behavioral

- business

These are the main aspects: when all of them are handled, you can migrate the front-end of any application. But some other elements are still missing 😄 For example, how do you migrate I18N files?

In this post, I'll present how to build a simple migration tool that converts I18N files from the .properties format (used by Java) to the .json format (used by Angular).

I18N files

First, let's see our source and target.

As a source, I have several .properties files containing the I18N entries of a Java project.

Each file contains a set of key/value pairs and comments.

For example, EditerMessages_fr.properties looks as follows:

```properties
##########
# Page : Edit
##########

pageTitle=Editer
classerDemande=Demande
classerDiffusion=Diffusion
classerPar=Classer Par
```

And its Arabic version, EditerMessages_ar.properties:

```properties
#########
# Page : Editer
#########

pageTitle=تحرير
classerDemande=طلب
classerDiffusion=بث
classerPar=تصنيف حسب
```

As a target, I need only one JSON file per language. Thus, the file for the French translation looks like this:

```json
{
  "EditerMessages" : {
    "classerDemande" : "Demande",
    "classerDiffusion" : "Diffusion",
    "classerPar" : "Classer Par",
    "pageTitle" : "Editer"
  }
}
```

And the Arabic version:

```json
{
  "EditerMessages" : {
    "classerDemande" : "طلب",
    "classerDiffusion" : "بث",
    "classerPar" : "تصنيف حسب",
    "pageTitle" : "تحرير"
  }
}
```

To transform the .properties files into JSON, we will use Model-Driven Engineering (MDE).

The approach is divided into three main steps:

- Designing a meta-model representing internationalization

- Creating an importer of properties files

- Creating a JSON exporter

Internationalization Meta-model

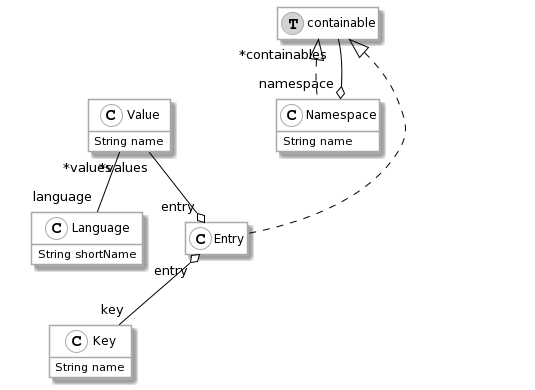

I18N files are simple. They consist of a set of key/values. Each value is associated with a language. And each file can be associated with a namespace.

For example, in the introduction example, the namespace of all entries is "EditerMessages".

I designed a meta-model to represent all those concepts:
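As a rough illustration of these concepts, here is a Python sketch of such a meta-model. The class names mirror the CS18N* classes used in the Pharo code later in this post (namespace, entry, key, value, language). The attributes and helper methods (`add_value`, `value_in`) are my assumptions and may differ from the actual meta-model shown in the figure.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional, Union


@dataclass(eq=False)
class Language:
    short_name: str  # e.g. "fr" or "ar"


@dataclass(eq=False)
class Key:
    name: str  # e.g. "pageTitle"


@dataclass(eq=False)
class Value:
    name: str  # the translated text
    language: Language


@dataclass(eq=False)
class Entry:
    key: Key
    namespace: Namespace = field(repr=False)  # owning namespace (not printed, avoids cycles)
    values: list[Value] = field(default_factory=list)

    def add_value(self, value: Value) -> None:
        self.values.append(value)

    def value_in(self, language: Language) -> Optional[str]:
        """Return the translation for `language`, or None if there is none."""
        for value in self.values:
            if value.language is language:
                return value.name
        return None


@dataclass(eq=False)
class Namespace:
    name: str  # e.g. "EditerMessages"
    parent: Optional[Namespace] = field(default=None, repr=False)  # None for a root namespace
    containables: list[Union[Namespace, Entry]] = field(default_factory=list)


# Usage: one entry with its French translation
fr = Language("fr")
editer = Namespace("EditerMessages")
page_title = Entry(Key("pageTitle"), editer)
page_title.add_value(Value("Editer", fr))
editer.containables.append(page_title)
```

Identity comparison (`eq=False`) is used on purpose: two languages or entries are the same only if they are the same model object, as in the Pharo model.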

Once the meta-model is designed, we must create an importer that takes .properties files as input and produces a model.

Properties Importer

To produce a model, I first looked for an existing .properties parser, without much success.

Thus, I decided to create my own parser.

Given a correctly formatted file, the parser returns the I18N entries.

Then, by iterating over this collection, I build an I18N model.

I18N parser

To implement the parser, I used the PetitParser2 project. This project aims to ease the creation of new parsers.

First, I downloaded the latest version of Moose and installed PetitParser2 using the command provided in the repository README:

```smalltalk
Metacello new
    baseline: 'PetitParser2';
    repository: 'github://kursjan/petitparser2';
    load.
```

In my Moose image, I created a new parser by subclassing the PP2CompositeNode class:

```smalltalk
PP2CompositeNode << #CS18NPropertiesParser
    slots: { };
    package: 'Casino-18N-Model-PropertyImporter'
```

Then, I defined the parsing rules. Using PetitParser2, each rule corresponds to a method.

First, start is the entry point.

```smalltalk
start
    ^ pairs end
```

pairs parses the entries of the .properties files.

```smalltalk
pairs
    ^ comment optional starLazy , pair ,
        ((newline / comment) star , pair ==> [ :token | token second ]) star ,
        (newline / comment) star
        ==> [ :token |
            ((OrderedCollection with: token second)
                addAll: token third;
                yourself) asArray ]
```

The first part of this method (before the last ==>) is the grammar rule. The second part (after it) is the production, i.e., what the parser returns.

The rule first skips any leading comments. Then, it parses one pair, followed by any number of pairs, each preceded by newlines or comments (the inner ==> keeps only the pair and drops the separators), and finally any trailing newlines or comments.

The production builds an array of all the parsed pairs: the first pair, followed by the others.

This parser is clearly not perfect and would require some improvements. Nevertheless, it works in our context.
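The grammar above accepts a simple subset of the .properties format: comment lines starting with #, blank lines, and key=value lines. For readers without a Pharo image, here is the same idea as a hand-written, line-based Python sketch. It is not a port of the PetitParser2 grammar, and the name `parse_properties` is my own. Like the original, it ignores the more advanced parts of the Java format, such as `:` separators, `!` comments, escapes, and line continuations.

```python
from __future__ import annotations


def parse_properties(text: str) -> list[tuple[str, str]]:
    """Return the (key, value) pairs of a simple .properties file, in file order.

    Supported subset: '#' comment lines, blank lines and 'key=value' lines.
    Whitespace around keys and values is trimmed.
    """
    pairs: list[tuple[str, str]] = []
    for line_number, raw_line in enumerate(text.splitlines(), start=1):
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        key, separator, value = line.partition("=")
        if not separator:
            raise ValueError(f"line {line_number}: expected key=value, got {raw_line!r}")
        pairs.append((key.strip(), value.strip()))
    return pairs


# Usage on the French example file
SAMPLE = """\
##########
# Page : Edit
##########

pageTitle=Editer
classerDemande=Demande
classerDiffusion=Diffusion
classerPar=Classer Par
"""
sample_pairs = parse_properties(SAMPLE)
```

`str.partition` splits on the first `=` only, so a value that itself contains `=` is kept intact.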

Building the I18N model

Now that we can parse one file, we can build an I18N model.

To do so, we will first parse every .properties file.

For each file, we extract the language and the namespace based on the file name.

For example, EditerMessages_fr.properties is the file for the fr language and the EditerMessages namespace.
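This naming convention (`<namespace>_<language>.properties`) is easy to decode. Here is a minimal Python sketch, assuming the language code is whatever follows the last underscore. The function name `namespace_and_language` is hypothetical. A region-qualified name such as EditerMessages_fr_FR.properties would be split into the namespace EditerMessages_fr and the language FR, so it would need extra handling.

```python
from __future__ import annotations

from pathlib import Path


def namespace_and_language(file_name: str) -> tuple[str, str]:
    """Split 'EditerMessages_fr.properties' into ('EditerMessages', 'fr')."""
    stem = Path(file_name).stem  # drop the folder and the .properties extension
    namespace, separator, language = stem.rpartition("_")  # split on the LAST underscore
    if not separator or not namespace or not language:
        raise ValueError(f"expected <namespace>_<language>.properties, got {file_name!r}")
    return namespace, language
```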

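Then, for each entry of the file, we create an entry in our model, inside the namespace and with the correct language attached. If an entry with the same key already exists (for instance, imported from another language file), we only add a new value to it: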

```smalltalk
importString: aString
    (parser parse: aString) do: [ :keyValue |
        (self model allWithType: CS18NEntry) asOrderedCollection
            detect: [ :entry |
                "search for an existing entry with the same key in the current namespace"
                entry namespace name = currentNamespace name
                    and: [ entry key name = keyValue key ] ]
            ifOne: [ :entry |
                "If the entry already exists (e.g., imported from another language file), only add a value"
                entry addValue: ((self createInModel: CS18NValue)
                    name: keyValue value;
                    language: currentLanguage;
                    yourself) ]
            ifNone: [
                "If no entry exists, create it with its key and first value"
                (self createInModel: CS18NEntry)
                    namespace: currentNamespace;
                    key: ((self createInModel: CS18NKey)
                        name: keyValue key;
                        yourself);
                    addValue: ((self createInModel: CS18NValue)
                        name: keyValue value;
                        language: currentLanguage;
                        yourself);
                    yourself ] ]
```

After performing the import, we get a model with several entries for each namespace. Each entry has a key and several values, and each value is attached to its language.
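Stripped of the meta-model classes, the import boils down to merging entries by key. Here is a minimal Python sketch that uses a plain nested dictionary, `model[namespace][key][language]`, instead of the CS18N* model objects; this representation and the name `import_pairs` are my assumptions. Entries are looked up per namespace, so a key such as pageTitle that appears in several files keeps a separate entry in each namespace.

```python
from __future__ import annotations


def import_pairs(model: dict, namespace: str, language: str,
                 pairs: list[tuple[str, str]]) -> None:
    """Merge the (key, value) pairs of one file into the model.

    The model is a plain nested dict: model[namespace][key][language] = text.
    An entry is identified by its namespace and key: if it already exists
    (e.g. imported from another language file), only a value is added;
    otherwise the entry is created first.
    """
    entries = model.setdefault(namespace, {})
    for key, value in pairs:
        values = entries.setdefault(key, {})  # reuse the entry or create it
        values[language] = value              # attach the value to its language


# Usage: the French file, then the Arabic one, for the same namespace
model: dict = {}
import_pairs(model, "EditerMessages", "fr", [("pageTitle", "Editer"), ("classerPar", "Classer Par")])
import_pairs(model, "EditerMessages", "ar", [("pageTitle", "تحرير")])
```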

JSON exporter

To perform the JSON export, I used the NeoJSON project, which lets us define custom encoders for our own classes.

For the export, we first select a language. Then, we build a dictionary of all the root namespaces (the namespaces without a parent namespace):

```smalltalk
rootDic := Dictionary new.
(model allWithType: CS18NNamespace)
    select: [ :namespace | namespace namespace isNil ]
    thenDo: [ :namespace | rootDic at: namespace name put: namespace ].
```

To export a namespace (i.e., a CS18NNamespace), I define a custom encoder:

```smalltalk
writter for: CS18NNamespace customDo: [ :mapper |
    mapper encoder: [ :namespace |
        (self constructNamespace: namespace) asDictionary ] ].
```

```smalltalk
constructNamespace: aNamespace
    | dic |
    dic := Dictionary new.
    aNamespace containables do: [ :containable |
        (containable isKindOf: CS18NNamespace)
            ifTrue: [ dic at: containable name put: (self constructNamespace: containable) ]
            ifFalse: [
                "should be a CS18NEntry"
                dic
                    at: containable key name
                    put: (containable values
                        detect: [ :value | value language = language ]
                        ifOne: [ :value | value name ]
                        ifNone: [ '' ]) ] ].
    ^ dic
```

The custom encoder converts a namespace into a dictionary that maps each entry key to its value in the selected language, or to an empty string when no translation exists. Nested namespaces become nested dictionaries.
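The same export can be sketched in Python with the standard json module. This sketch assumes the nested-dictionary model `model[namespace][key][language]` (not the author's CS18N* model) and handles flat namespaces only, whereas the constructNamespace: method above also recurses into nested namespaces. The name `export_language` is hypothetical. Missing translations become an empty string, like the ifNone: [ '' ] branch.

```python
import json


def export_language(model: dict, language: str) -> str:
    """Return the JSON document for one language.

    model[namespace][key][language] = text; keys without a translation
    in `language` are exported as ''.
    """
    document = {
        namespace: {key: values.get(language, "") for key, values in entries.items()}
        for namespace, entries in model.items()
    }
    # ensure_ascii=False writes Arabic characters as-is instead of \uXXXX escapes;
    # sort_keys=True gives a stable, alphabetically ordered output
    return json.dumps(document, ensure_ascii=False, indent=2, sort_keys=True)
```

Sorting the keys reproduces the alphabetical order of the target files shown at the beginning of this post.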

Perform the migration

Once my importer and exporter are implemented, I can perform the migration.

To do so, I use a small script.

It creates an I18N model, imports the entries of all the .properties files into the model, and exports the Arabic entries to a JSON file.

```smalltalk
"Create a model"
i18nModel := CS18NModel new.

"Create an importer"
importer := CS18NPropertiesImporter new.
importer model: i18nModel.

"Import all entries from the <myProject> folder"
('D:\dev\myProject\' asFileReference allChildrenMatching: '*.properties') do: [ :fileRef |
    self record: fileRef absolutePath basename.
    importer importFile: fileRef ].

"Export the Arabic JSON I18N file"
'D:/myFile-ar.json' asFileReference writeStreamDo: [ :stream |
    CS18NPropertiesExporter new
        model: importer model;
        stream: stream;
        language: ((importer model allWithType: CS18NLanguage) detect: [ :lang | lang shortName = 'ar' ]);
        export ]
```
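For comparison, the whole pipeline fits in one small Python function. This is a self-contained sketch, not a translation of the Pharo script. It assumes the simple key=value files shown earlier, the `<namespace>_<language>.properties` naming convention, UTF-8 encoded files, and flat namespaces. The name `migrate` is hypothetical.

```python
import json
from pathlib import Path


def migrate(source_dir: Path, language: str, target: Path) -> None:
    """Write one JSON I18N file for `language` from all .properties files in source_dir."""
    model: dict = {}  # model[namespace][key][language] = text
    for path in sorted(source_dir.rglob("*.properties")):
        namespace, _, lang = path.stem.rpartition("_")  # EditerMessages_fr -> (EditerMessages, fr)
        entries = model.setdefault(namespace, {})
        for line in path.read_text(encoding="utf-8").splitlines():  # assumption: UTF-8 files
            line = line.strip()
            if not line or line.startswith("#"):
                continue  # skip blank lines and comments
            key, _, value = line.partition("=")
            entries.setdefault(key.strip(), {})[lang] = value.strip()
    document = {
        namespace: {key: values.get(language, "") for key, values in entries.items()}
        for namespace, entries in model.items()
    }
    target.write_text(
        json.dumps(document, ensure_ascii=False, indent=2, sort_keys=True),
        encoding="utf-8",
    )
```

For example, `migrate(Path("D:/dev/myProject"), "ar", Path("D:/myFile-ar.json"))` plays the same role as the script above.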

Resources

The meta-model, importer, and exporter are freely available on GitHub.